Oracle Database Architecture Diagram

User Process: Whenever a user starts an application program (such as a Pro*C program) or an Oracle tool (such as Oracle Enterprise Manager or SQL*Plus), Oracle Database creates a user process to run the user's application.

Server Process: Server process is the process which work on behalf of user process on the server side. Since user process can not work on server side, it is server process which work on behalf of user process.

Listener: Listener is the service which accepts incoming credentials from users and helps in establishing connection with the server. Listener always runs on the server side. Listener's role is only until a connection is established. Once connection is established and listener goes down there wont be any problem on the database side. Only new connections wont be established but the existing connections will remain unaffected.

PGA(PROGRAM GLOBAL AREA OR PRIVATE GLOBAL AREA): Oracle creates a program global area for each user when the user starts a session. This area holds data and control information for the dedicated server process that Oracle creates for each individual

user. Unlike the SGA, the PGA is for the exclusive use of each server process and can’t be shared bymultiple processes. A session’s logon information and persistent information, such as bind variable information and data type conversions, are still a part of the SGA, unless you’re using a shared server configuration, but the runtime area used while SQL statements are executing is located in the PGA.

For example, a user’s process may have some cursors (which are handles to memory areas

where you store the values for variables) associated with it. Because these are the user’s cursors, they are not automatically shared with other users and so the PGA is a good place to save those private values. Another major use of the PGA is for performing memory-intensive SQL operations that involve sorting, such as queries involving ORDER BY and GROUP BY clauses. These sort operations need a working area, and the PGA provides that memory area.

You can classify the PGA memory into the following types:

• Private SQL area: This area of memory holds SQL variable bind information and runtime

memory structures. Each session that executes a SQL statement will have its own private SQL area.

• Runtime area: The runtime area is created for a user session when the session issues a SELECT, INSERT, UPDATE, or DELETE statement. After an INSERT, DELETE, or UPDATE statement is run, or after the output of a SELECT statement is fetched, the runtime area is freed by Oracle.

If a user’s session uses complex joins or heavy sorting (grouping and ordering) operations, the session uses the runtime area to perform all those memory-intensive operations.

Instance: Instance is the means through which a user communiate with the database. It is made up of memory cache(SGA) and background processes.

A database is made up of physical files and a user can not directly access the database. So there is instance through which a user access the database. When instance is down then user can not communicate with the database.

We often say database is down but database can never go down, it is instance which goes down.

SGA(Shared Global Area or System global area): SGA is the most important memory component in an Oracle instance. SGA is made up of several memory caches. SGA’s main purpose is to speed up query performance and to enable a high amount of concurrent database activity. Because processing in memory is much faster than disk I/O. SGA is shared by all the users in the database

.

When we start an instance in Oracle, the instance takes a certain amount of memory from the operating system’s RAM—the amount is based on the size of the SGA component that we define in the initialization parameter file of the database. When the instance is shut down, the memory used by the SGA goes back to the host system i.e to RAM.

We use two parameters to define SGA size in parameter file, SGA_TARGET and SGA_MAX_SIZE.

The component of SGA are:

1. Shared pool

2. Database buffer cache

3. Log buffer cache

4. Large pool

5. Java pool

6. Stream pool

Shared Pool: Shared pool is very important component of SGA. The shared pool holds executable PL/SQL code and SQL statements, as well as information regarding the data dictionary tables. The data dictionary is a set of key tables that oracle maintains, and it contains crucial metadata about the database tables, users, privileges, and so forth.

Proper sizing of the shared pool area benefits us in a couple of ways. First, response times will be better because we’re reducing processing time—if we don’t have to recompile the same Oracle code each time a user executes a query, so we save time. Oracle will reuse the previously compiled code if it encounters the same code again. Second, more users can use the system because the reuse of code makes it possible for the database to serve more users with the same resources. Both the I/O rates and the CPU usage will diminish when the database uses its shared pool memory effectively.

We use SHARED_POOL_SIZE parameter to define its parameter in the parameter file.

There are two component of shared pool

1. Library Cache

2. Data Dictionary Cache

Library Cache: Parsing is done in the library cahche. It is the memory cache where all the SQL and PL/SQL parsed.

Oracle stores all compiled SQL statements in the library cache component of the shared pool. The library cache component of the shared pool memory is shared by all users of the database. Each time we issue a SQL statement, Oracle first checks the library cache to see if there is an already parsed and ready-to-execute form of the statement in there. If there is, Oracle uses the library cache version, reducing the processing time considerably—this is called a soft parse.

If Oracle doesn’t find an execution-ready version of the SQL code in the library cache, the

executable has to be built fresh—this is called a hard parse. Oracle uses the library cache part of the shared pool memory for storing newly parsed code. If there isn’t enough free memory in the shared pool, Oracle will flush older code from the shared pool to make room for your new code.

We can not resize library cache, to achive this purpose we resize the shared pool.

Data Dictionary Cache: The data dictionary cache component of the shared pool primarily contains object definitions, usernames, roles, privileges, and other such information. When we run a segment of SQL code, Oracle first has to ascertain whether we have the privileges to perform the planned operation. It checks the data dictionary cache to see whether the pertinent information is there, and if not, Oracle has to read the information from the data dictionary into the data dictionary cache. Obviously, the more often we find the necessary information in the cache, the shorter the processing time. In general, a data dictionary cache miss, which occurs when Oracle doesn’t find the information it needs in the cache,

tends to be more expensive than a library cache miss.

There is no direct way to adjust the data dictionary cache size. You can only increase or decrease the entire shared pool size.

Database buffer cache:The database buffer cache consists of the memory buffers that Oracle uses to hold the data read by the server process from datafiles on disk in response to user requests. Buffer cache access is, of course, much faster than reading the data from disk storage. When the users modify data, those changes are made in the database buffer cache as well. The buffer cache thus contains both the original blocks read from disk and the changed blocks that have to be written back to disk.

You can group the memory buffers in the database buffer cache into three components:

• Free buffers: These are buffers that do not contain any useful data, and, thus, the database can reuse them to hold new data it reads from disk.

• Dirty buffers: These contain data that was read from disk and then modified, but hasn’t yet been written to the datafiles on disk.

• Pinned buffers: These are data buffers that are currently in active use by user sessions.

When a user process requests data, Oracle will first check whether the data is already available in the buffer cache. If it is, the server process will read the data from the SGA directly and send it to the user. If the data isn’t found in the buffer cache, the server process will read the relevant data from the datafiles on disk and cache it in the database buffer cache. Of course, there must be free buffers available in the buffer cache for the data to be read into them. If the server process can’t find a free buffer after searching through a threshold number of buffers, it asks the database writer process to write some of the dirty buffers to disk, thus freeing them up for writing the new data it wants to read into the buffer cache.

Oracle maintains an LRU list of all free, pinned, and dirty buffers in memory. It’s the database writer process’s job to write the dirty buffers back to disk to make sure there are free buffers available in the database buffer cache at all times. To determine which dirty blocks get written to disk, Oracle uses a modified LRU algorithm, which ensures that only the most recently accessed data is retained in the buffer cache. Writing data that isn’t being currently requested to disk enhances the performance of the database.

The larger the buffer cache, the fewer the disk reads and writes needed and the better the performance of the database. Therefore, properly sizing the buffer cache is very important for the proper performance of your database. Of course, simply assigning an extremely large buffer cache can hurt performance, because you may end up taking more memory than necessary and causing paging and swapping on your server.

Redo Log Buffer Cache: Whenever a single atomic change in the database, redo is generated. And this redo is recored in redo log buffer cache. Usually the size of the redo log buffer cache is very less as compared to other memory cache in the so that it gets flushed very frequntly and redo is written in the online redo log file by log writter.

The log writer process writes the contents of the redo log buffer to disk under any of the following circumstances:

• The redo log buffer is one-third full.

• Users commit a transaction.

• The database buffer cache is running low on free space and needs to write changed data

to the redo log. The database writer instructs the log writer process to flush the log buffer’s

contents to disk to make room for the new data.

Oracle provides an option called nologging that bypass the redo logs almost completely and thus avoid contention during certain operations (such as a large data load). We can also batch the commits in a long job, thus enabling the log writer process to more efficiently write the redo log entries.

We use the LOG_BUFFER initialization parameter to set the size of the redo log buffer.

Large_pool: The large pool is a purely optional memory pool, and Oracle manages it quite differently from the shared pool. We only need to configure the large pool if we’re using parallel queries in the database.

Oracle also recommends configuring this pool if we’re using RMAN or the shared server

configuration instead of the default dedicated server configuration. We set the size of this pool in the initialization file by using the LARGE_POOL_SIZE parameter. The large pool memory component is important if we’re using the shared server architecture.

Java_pool: The Java pool (set by using the JAVA_POOL_SIZE parameter) is designed for databases that contain a lot of Java code, so that the regular SGA doesn’t have to be allocated to components using Java-based objects. Java pool memory is reserved for the Java Virtual Machine (JVM) and for your Java-based applications. If we’re deploying Enterprise JavaBeans or using CORBA, we could potentially need a Java pool size greater than 1GB.

Stream Pool: Oracle Streams is a technology for enabling data sharing among different databases and among different application environments. The Streams pool is the memory allocated to support Streams activity in your instance. If we manually set the Streams pool component by using the STREAMS_POOL_ SIZE initialization parameter, memory for this pool is transferred from the buffer cache after the first use of Streams. If we use automatic shared memory management (discussed next), the memory for the Streams pool comes from the global SGA pool. The amount transferred is up to 10 percent of the shared pool size.

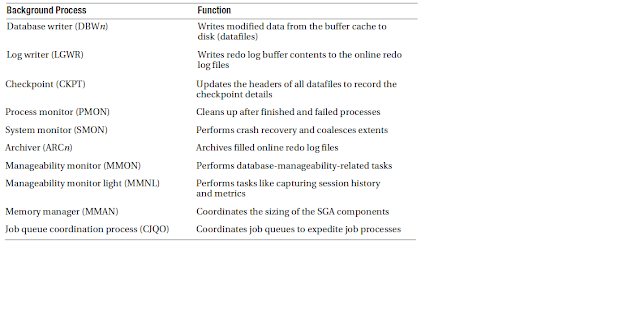

Background process: The background processes are the real workhorses of the Oracle instance—they enable large numbers of users to concurrently and efficiently use information stored in database files. Oracle creates these processes automatically when you start an instance, and by being continuously hooked into the operating system, these processes relieve the Oracle software from having to repeatedly start numerous, separate processes for the various tasks that need to be done on the operating system’s server. Each of the Oracle background processes is in charge of a separate task, thus increasing the efficiency of the database instance. These processes are automatically created by Oracle when you start the database instance, and they terminate when the database is shut down.

I briefly discuss the main Oracle background processes in the following sections.

Database Writer: Oracle doesn’t modify data directly on the disks—all modifications of data take place in Oracle memory. The database writer process is then responsible for writing the “dirty” (modified) data from the memory areas known as database buffers to the actual datafiles on disk.

It is the DBWn process’s job to monitor the use of the database buffer cache, and if the free space in the database buffers is getting low, the database writer process makes room available by writing some of the data in the buffers to the disk files. The database writer process uses the least recently used (LRU) algorithm (or a modified version of it), which retains data in the memory buffers based on how long it has been since someone asked for that data. If a piece of data has been requested very recently, it’s more likely to be retained in the memory buffers.

The database writer process writes dirty buffers to disk under the following conditions:

1. When the database issues a checkpoint

2. When a server process can’t find a clean reusable buffer after checking a threshold number of buffers

3. Every 3 seconds

For very large databases or for databases performing intensive operations, a single database

writer process may be inadequate to perform all the writing to the database files. Oracle provides for the use of multiple database writer processes to share heavy data modification workloads. You can have a maximum of 20 database writer processes (DBW0 through DBW9, and DBWa through DBWj). Oracle recommends using multiple database writer processes, provided you have multiple processors.

You can specify the additional database writer processes by using the DB_WRITER_PROCESSES initialization parameter in the SPFILE Oracle configuration file. If you don’t specify this parameter, Oracle allocates the number of database writer processes based on the number of CPUs and processor groups on your server. For example, on my 32-processor HP-UX server, the default is four database writers (one database writer per eight processors), and in another 16-processor server, the default is two database writers.

Oracle further recommends that you first ensure that your system is using asynchronous I/O

before deploying additional database writer processes beyond the default number—you may not need multiple database writer processes if so. (Even when a system is capable of asynchronous I/O, that feature may not be enabled.) If your database writer can’t keep up with the amount of work even after asynchronous I/O is enabled, you should consider increasing the number of database writers.

Log Writer: The job of the log writer process is to transfer the contents of the redo log buffer to disk. Whenever you make a change to a database table (whether an insertion, update, or deletion), Oracle writes the committed and uncommitted changes to a redo log buffer (memory buffer). The LGWR process then transfers these changes from the redo log buffer to the redo log files on disk. The log writer writes a commit record to the redo log buffer and writes it to the redo log on disk immediately, whenever a user commits a transaction.

If you’ve multiplexed the redo log (as you’re supposed to!), the log writer will write the contents of the redo log buffer to all members of the redo log group. If one or more members are damaged or otherwise unavailable, the log writer will just write to the available members of a group. If it can’t write to even one member of a redo log group, the log writer signals an error. Each time the log writer writes to the redo log on disk, it transfers all the new redo log entries that arrived in the buffer sincethe log writer last copied the buffer contents to the redo log.

The log writer writes all redo log buffer entries to the redo logs under the following

circumstances:

• Every 3 seconds.

• When the redo log buffer is one-third full.

• When the database writer signals that redo records need to be written to disk. Under Oracle’s write-ahead protocol, all redo records associated with changes in the block buffers must be written to disk (that is, to the redo log files on disk) before the datafiles on disk can be modified. While writing dirty buffers from the buffer cache to the storage disks, if the database writer discovers that certain redo information has not been written to the redo log files, it signals the log writer to first write that information, so it can write its own data to disk.

• In addition, as mentioned earlier, the log writer writes a commit record to the redo log

following the committing of each transaction. The redo log files, as you learned earlier, are

vital during the recovery of an Oracle database from a lost or damaged disk. Before the database writer writes the changed data to disk, it ensures that the log writer has

already completed writing all redo records for the changed data from the log buffer to the redo logs on disk. This is called the write-ahead protocol.

When you issue a commit statement to make your changes permanent, the log writer first places a commit record in the redo log buffer and immediately writes that record to the redo log, along with the redo entries pertaining to the committed transaction. The writing of the transaction’s commit record to the redo log is the critical event that marks the committing of the transaction. Each committed transaction is assigned a system change number, which the log writer records in the redo log. The database makes use of these SCNs during a database recovery. The database waits to change the data blocks on disk until a more opportune time and returns a success code indicating the successful committing of the transaction, although the changed data buffers haven’t yet been copied to the datafiles on disk. This technique of indicating a successful commit ahead of the actual writing

of the changed data blocks to disk is called the fast commit mechanism. The redo log files may contain both committed as well as uncommitted transaction records, because of the way the log writer records redo records in the redo logs on disk. If the database needs

buffer space, the log writer may also write the redo log entries to the redo log files from the redo log buffer even before it commits a transaction. Of course, the database ensures that these entries are written to the datafiles only if the transaction is committed later on.

Checkpoint: The checkpoint process is charged with telling the database writer process when to write the dirty data in the memory buffers to disk. After telling the database writer process to write the changeddata, the CKPT process updates the datafile headers and the control file to record the checkpoint details, including the time when the checkpoint was performed. The purpose of the checkpoint process is to synchronize the buffer cache information with the information on the database disks. Each checkpoint record consists of a list of all active transactions and the address of the most recent log record for those transactions. A checkpointing process involves the following steps:

1. Flushing the contents of the redo log buffers to the redo log files

2. Writing a checkpoint record to the redo log file

3. Flushing the contents of the database buffer cache to disk

4. Updating the datafile headers and the control files after the checkpoint completes

There is a close connection between how often Oracle performs the checkpointing operation and the recovery time after a database crash. Because database writer processes write all modified blocks to disk at checkpoints, the more frequent the checkpoints, the less data will need to be recovered when the instance crashes. However, checkpointing involves an overhead cost. Oracle lets you configure the database for automatic checkpoint tuning, whereby the database server tries to write out the dirty buffers in the most efficient way possible, with the least amount of adverse impact on throughput and performance. If you use automatic checkpoint tuning, you don’t have to set any checkpoint-related parameters.

Process Monitor: When user processes fail, the process monitor process cleans up after them, ensuring that the database frees up the resources that the dead processes were using. For example, when a user process dies while holding certain table locks, the PMON process releases those locks so other users can use the tables without any interference from the dead process. In addition, the PMON process restarts failed server processes (in a shared server architecture) and dispatcher processes. The PMON process sleeps most of the time, waking up at regular intervals to see if it is needed. Other processes will also wake up the PMON process if necessary.

The PMON process automatically performs dynamic service registration. When you create a

new database instance, the PMON process registers the instance information with the listener, which is the entity that manages requests for database connections (Chapter 11 discusses the listener in detail). This dynamic service registration eliminates the need to register the new service informationm in the listener.ora file, which is the configuration file for the listener.

System Monitor:The system monitor process, as its name indicates, performs system-monitoring tasks for the Oracle instance, such as these:

• Upon restarting an instance that crashed, SMON determines whether the database is consistent.

• SMON coalesces free extents if you use locally managed tablespaces, which enables you to assign larger contiguous free areas on disk to your database objects.

• SMON cleans up unnecessary temporary segments.

Like the PMON process, the SMON process sleeps most of the time, waking up to see if it is

needed. Other processes will also wake up the SMON process if they detect a need for it.

Datafiles: Oracle datafiles make up the largest part of the physical storage of the database. A datafile can belong to only one database, and one or more datafiles constitute the logical entity called the tablespace. Oracle datafiles constitute most of a database’s total space.

When the database instance needs to read table or index data, it reads that from the datafiles on disk, unless that data is already cached in Oracle’s memory. Similarly, the database writes new table or index data or updates to existing data to the datafiles on disk for permanent storage.

Control File: The control file is a file that the Oracle DBMS maintains to manage the state of the database, and it is probably the single most important file in the Oracle database. Every database has one control file, but due to the file’s importance, multiple identical copies (usually three) are maintained—when the database writes to the control file, all copies of the file get written to. The control file is critical to the functioning of the database, and recovery is difficult without access to an up-to-date control file. Oracle creates the control file (and the copies) during the initial database creation process.

The control file contains the names and locations of the datafiles, redo log files, current log

sequence numbers, backup set details, and the all-important system change number (SCN), which indicates the most recent version of committed changes in the database—information that is not accessible by users even for reading purposes. Only Oracle can write information to the control file, and the Oracle server process continually updates the control file during the operation of the database.

Control files are vital when the Oracle instance is operating. When you turn the instance on,

Oracle reads the control file for the location of the data and log files. During the normal operation of the database, the database consults the control file periodically for necessary information regarding virtually every structure of the database.

The control file is also important in verifying the integrity of the database and when recovering the database. The checkpoint process instructs the database writer to write data to the disk when some specific conditions are met, and the control file notes all checkpoint information from the online redo log files. This information comes in handy during a recovery—the checkpoint information in the control file enables Oracle to decide how far back it needs to go in recovering data from the online redo log files. The checkpoint indicates the SCN up to which the datafiles are already written to the datafiles, so the recovery process will disregard all the information in the online redo log files before the checkpoint noted in the control file.

When you start an Oracle instance, it consults the control file first, to identify all the datafiles and the redo log files that must be opened for database operations.

Redo Log Files: The redo log files record all the changes made to the database, and they are vital during the recovery of a database. If you need to restore your database from a backup, you can recover the latest changes made to the database from the redo log files. The set of redo log files that are currently being used to record the changes to the database are called online redo log files. These logs can be archived (copied) to a different location before being reused, and the saved logs are called archived redo logs.

Oracle writes all final changes made to data (committed data) first to the redo log files, before applying those changes to the actual datafiles themselves. Thus, if a system failure prevents these data changes from being written to the permanent datafiles, Oracle will use the redo logs to recover all transactions that committed but couldn’t be applied to the datafiles. Thus, redo log files guarantee that no committed data is ever lost. If you have all the archived redo logs since the last database backup, and a set of the current redo logs as well, you can always bring a database up to date.

SPFILE :When you create a new database, you specify the initialization parameters for the Oracle instance in a special configuration file called the server parameter file, or SPFILE. You can also use an older version of the configuration file called the init.ora file, but Oracle recommends the use of the more sophisticated SPFILE. In the SPFILE, you specify the memory limits for the instance, the locations of the control files, whether and where the archived logs are stored, and other settings that determine the behavior of the Oracle database server. You can’t, however, edit the SPFILE manually, as you could the init.ora file, since the SPFILE is a binary file.

The SPFILE is always stored on the database server, thus preventing the proliferation of parameter files that sometimes occurs with the use of the init.ora file. By default, the SPFILE (and the init.ora file) is placed in the ORACLE_HOME/dbs directory in UNIX systems and the ORACLE_HOME\database directory in Windows systems. The ORACLE_HOME directory is the standard location for the Oracle executables.

Password File: The password file is an optional file in which you can specify the names of database users who were granted the special SYSDBA or SYSOPER administrative privileges, which enable them to perform privileged operations, such as starting, stopping, backing up, and recovering databases.

Understanding of oracle database architecture plays an important role to become a good DBA.

ReplyDeleteIt provides me great start towards DBA.. Keep continue posting!!

ReplyDeleteExcellent blog I visit this blog it's really awesome. oracle training in chennai

ReplyDeleteLearn Python Development for excellent job opportunities from Infycle Technologies, the best Python training institute in Chennai. Infycle Technologies gives the most trustworthy Python training in Chennai, with full hands-on practical sessions from professional trainers in the field. The job placement interviews will be arranged for the candidates to meet the job interviews without missing them. Transform your career to the next level by calling 7502633633 to Infycle Technologies and grab a free demo to know more.Best Python Training in Chennai | Infycle Technologies

ReplyDelete